January 29, 2020

Introduction

Since their advent 40 years ago, performance audits in the public sector have tended to focus on systems and practices. Despite intentions to produce more audits focused on whether government programs achieve their intended results, these results audits remain uncommon.

In this article, I present an approach, based on a simple model, that can help public sector auditors to plan and conduct results audits and report their findings effectively. This approach can also help identify root causes.

The Promise of Results Auditing: Still a Work in Progress

Performance audits were originally designed to examine economy, efficiency, and effectiveness (with restrictions on effectiveness in the mandate of some audit institutions). Disappointingly, the first generation of audits in the 1980s often read like a Management 101 textbook: too focused on systems and practices, and not enough on whether the audited activities were producing results. The consensus was that audits should shift to a results focus (what I refer to as “results audits”), but little seemed to change in the years that followed. Audits are still much more about whether audited activities are well managed than about whether they deliver results.

Much of the early problem was that information on results was hard to obtain and hard to work with, for the following reasons:

- The few results databases available were often not used for management decision making.

- When a database did exist, auditors faced the daunting task of first cleaning the data before they had something that could be analyzed or used as a sample frame. Or the audit required linking two data sets that had never been matched before; missing identifiers would reduce the number of successful matches.

- If sampling was required, the sample sizes needed for a representative sample that allowed extrapolation were dauntingly large.
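To give a sense of the sampling burden described in the last point, the standard textbook calculation for estimating a proportion (Cochran's formula with a finite population correction) can be sketched in a few lines of Python. This is an illustration only: the function name, the 95% confidence level, the 5% margin of error, and the population of 10,000 records are assumptions chosen for the example, not figures from any audit.

```python
import math
from statistics import NormalDist


def required_sample_size(population=None, confidence=0.95,
                         margin_of_error=0.05, expected_proportion=0.5):
    """Sample size needed to estimate a proportion (Cochran's formula).

    All defaults are illustrative assumptions. An expected proportion of 0.5
    is the most conservative choice because it maximizes the variance.
    """
    # Two-sided critical value of the standard normal distribution
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)

    # Sample size for a very large (effectively infinite) population
    n0 = z ** 2 * expected_proportion * (1 - expected_proportion) / margin_of_error ** 2

    if population is None:
        return math.ceil(n0)

    # Finite population correction: smaller populations need fewer items
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


if __name__ == "__main__":
    # Hypothetical sample frame of 10,000 records
    print(required_sample_size(population=10_000))
    # No finite population correction
    print(required_sample_size())
```

Under these assumptions, a frame of 10,000 records calls for a sample of 370 items, and the figure rises to 385 when the population is treated as unlimited. Tightening the margin of error increases the sample size quickly, which is why manual file reviews on this scale were so demanding.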

Fortunately, the situation has improved with the digital revolution and the advent of powerful data analytics tools and artificial intelligence applications.

But, even with available data, the complexity of auditing results remains. The analytical techniques—the kind that program evaluators and statisticians use—are often new to auditors. In recent years, we have seen regression analysis and other statistical techniques used in performance audits, but the examples are still rare. And, even where auditors have the necessary statistical training, it can still be challenging to clearly explain the basis of the audit findings in the audit report or to the Public Accounts Committee.

About the Author

Neil Maxwell worked for over 30 years at the Office of the Auditor General (OAG) of Canada, concluding his career there as Assistant Auditor General from 2007 to 2015. In his final years at the OAG, he was also the Product Leader for the performance audit practice and was appointed as Commissioner of the Environment and Sustainable Development on an interim basis.

In recent years, as a CAAF Associate, Neil has contributed to course development and delivered performance audit courses in many jurisdictions. He has also contributed to several research projects, including the CAAF Discussion Paper Approaches to Audit Selection and Multi-Year Planning, for which he was the lead researcher and author.

Neil received Master of Public Administration and Bachelor of Arts (Honours, Geography) degrees from Queen’s University.

Auditing Results Made Simpler: Modelling the Audited Activity as a Pipeline

The complexity of auditing results begins at the scoping stage. Auditors often find it complicated and time-consuming to complete the logic or process models required to understand the relation of inputs, activities, outputs, and outcomes in any given public sector program. For example, at the CAAF we teach four modelling techniques for planning (logic models, management plan/do/check, process models, and issue trees), plus another four Root Cause Analysis techniques (5 whys, Fishbone diagrams, Pareto models, and cause mapping). The resulting model is often not easy to understand and hence rarely makes it into the audit report. With so much complexity, it is not easy to find the right audit scope or determine where evidence collection and analysis should focus.

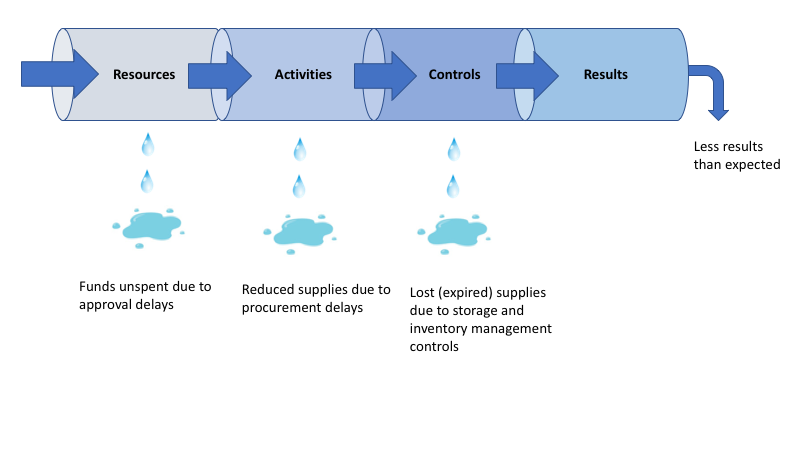

I often use the simpler approach of modelling the audited subject as a “pipeline.” Everyone understands a pipeline. Something flows in at one end and flows out the other, barring any leaks. Applying this to an audit, resources flow in one end, pumped by activities subject to management controls along the pipeline, and results flow out the other end. In theory, all the intended results emerge at the end of the pipeline, but more often some of the intended results leak out along the way because management controls are not designed or implemented well.

In a recent audit of supplies (not yet published), I suggested using the pipeline model. Parliament provides funds at one end, and users receive supplies when they require them at the other. The audit looked for leaks all along the pipeline, beginning at the input end (Figure 1). A significant portion of the money authorized by Parliament looked to be unspent because of approval delays. Then procurement delays seemed to reduce the quantity of supplies flowing through the pipeline. Losses from expired supplies in storage added to the leakage. The audit sought to quantify leaks along the pipeline and determine why controls at each stage failed.

Figure 1 – Finding Where the Results Pipeline Leaks in a Supplies Audit
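The idea of quantifying leaks stage by stage can be sketched in a short Python example. This is a minimal illustration under stated assumptions, not the method used in the audit: the `Stage` class, the `leak_report` function, and every number are hypothetical, and the sketch assumes that the flow at each stage can be expressed in a common unit (here, a notional value of 100 authorized at the input end). The three stages mirror the leaks described above.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str          # stage of the pipeline
    leak_cause: str    # why value is lost at this stage
    value_out: float   # value still in the pipeline after this stage


def leak_report(value_in, stages):
    """Return per-stage leaks and the share of the input that reaches users."""
    rows = []
    remaining = value_in
    for stage in stages:
        if stage.value_out > remaining:
            raise ValueError(f"{stage.name}: output exceeds what flowed in")
        leaked = remaining - stage.value_out
        rows.append({
            "stage": stage.name,
            "cause": stage.leak_cause,
            "leaked": leaked,
            "share_of_input": leaked / value_in,
        })
        remaining = stage.value_out
    return rows, remaining / value_in


# Hypothetical figures for illustration only (not audit findings)
stages = [
    Stage("Funding approval", "approval delays leave funds unspent", 88.0),
    Stage("Procurement", "procurement delays reduce quantities", 73.0),
    Stage("Storage", "supplies expire before use", 67.0),
]

rows, delivered_share = leak_report(100.0, stages)
for row in rows:
    print(f"{row['stage']:<18} leaked {row['leaked']:5.1f} "
          f"({row['share_of_input']:.0%} of input): {row['cause']}")
print(f"Share of input reaching users: {delivered_share:.0%}")
```

Expressing each leak as a share of the original input makes it easy to see which stage loses the most, and therefore where the examination of controls and root causes is likely to pay off.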
